We have been hearing a lot of buzzwords like Machine Learning and Artificial Intelligence these days.

The potential and possibilities of leveraging this technology are endless.

Computer vision is one such area, in which computers recognize and understand images and scenes. It covers tasks such as image recognition, object detection, image generation, image super-resolution and more.

Object detection is probably one of the most interesting of these topics because of its large number of real-life use cases.

Let’s delve a little deeper into it.

Using TensorFlow’s Object Detection API, we can build and deploy image recognition software. I have been playing around with the TensorFlow Object Detection API and have found its models very powerful.

The models that ship with the TensorFlow Object Detection API have been trained on the COCO (Common Objects in Context) dataset, which comprises about 300k images of 90 of the most commonly found objects. Here are a few examples:

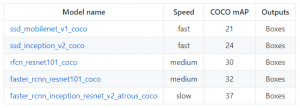

This API provides 5 different models that trade off speed of execution against accuracy in placing bounding boxes. See the table below:

In the past, object detection systems would take an image, split it into a bunch of regions and run a classifier on each of these regions. High classifier scores would be treated as detections in the image. But this means running a classifier around 1000 times over a single image, that is, around 1000 neural network evaluations to produce the detections.
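To make the cost of that region-by-region approach concrete, here is a minimal sketch in plain Python. The image size, window size, stride and the `toy_classifier` function are all assumptions chosen for illustration; a real system would call a neural network where the toy classifier is.

```python
from typing import Callable, Iterator, List, Tuple

Box = Tuple[int, int, int, int]  # (x, y, width, height) in pixels


def sliding_windows(img_w: int, img_h: int, win: int, stride: int) -> Iterator[Box]:
    """Yield square regions that tile the image left to right, top to bottom."""
    for y in range(0, img_h - win + 1, stride):
        for x in range(0, img_w - win + 1, stride):
            yield (x, y, win, win)


def detect(
    img_w: int,
    img_h: int,
    win: int,
    stride: int,
    classifier: Callable[[Box], float],
    threshold: float = 0.5,
) -> Tuple[List[Tuple[Box, float]], int]:
    """Run the classifier once per region and keep regions scoring at or above threshold."""
    evaluations = 0
    detections = []
    for box in sliding_windows(img_w, img_h, win, stride):
        score = classifier(box)
        evaluations += 1
        if score >= threshold:
            detections.append((box, score))
    return detections, evaluations


def toy_classifier(box: Box) -> float:
    """Hypothetical stand-in for a neural network: fires on one region only."""
    return 0.9 if box[:2] == (64, 64) else 0.1


detections, evaluations = detect(640, 480, win=64, stride=16, classifier=toy_classifier)
print(evaluations, detections)
```

With these assumed numbers, a single 640x480 image already needs 999 classifier calls for one window size, which is why this style of detector struggles to run in real time.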

Speed Is Very Important

We need detectors that run in real time, track objects as we move around and are robust to a wide variety of changes. That is what TensorFlow Lite provides. TensorFlow Lite is designed for mobile and embedded devices. It lets you run machine-learned models on-device with low latency, so you can use models for classification, regression or anything else you might want without necessarily making a round trip to a server.

It is currently supported on Android and iOS via a C++ API, along with a Java wrapper for Android developers. Additionally, on Android the interpreter can use the Android Neural Networks API for hardware acceleration; otherwise, it defaults to the CPU for execution.

TensorFlow Lite has a runtime on which you can run pre-existing models. It is not yet designed to train models, but you can train a model on some other high-powered machine and then convert it to the .tflite format to load into the mobile interpreter.
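As a rough sketch of that conversion step, the snippet below uses TensorFlow's Python converter on a model exported in the SavedModel format. The function name and the paths in the usage note are hypothetical, and some models (object detection models in particular) may need extra export steps before they convert cleanly.

```python
def convert_saved_model_to_tflite(saved_model_dir: str, output_path: str) -> int:
    """Convert a TensorFlow SavedModel to a .tflite file and return its size in bytes."""
    # Imported lazily so this module can be loaded on machines without TensorFlow.
    import tensorflow as tf

    converter = tf.lite.TFLiteConverter.from_saved_model(saved_model_dir)
    tflite_model = converter.convert()  # the serialized model, returned as bytes

    with open(output_path, "wb") as f:
        f.write(tflite_model)
    return len(tflite_model)
```

Calling `convert_saved_model_to_tflite("exported_model/", "model.tflite")` (both paths are placeholders) on the training machine would produce the file that later gets bundled with the app.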

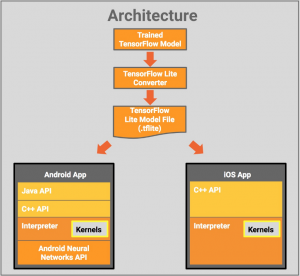

TensorFlow Lite Architecture

The high-level developer workflow is: take a trained TensorFlow model, convert it to the TensorFlow Lite format, and then update your apps to call the TensorFlow Lite interpreter through the appropriate API. On iOS there is also the option of using Core ML, i.e. take the trained model, convert it to the Core ML format using the TensorFlow to Core ML converter, and then run the converted model in the Core ML runtime.
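On a phone this last step goes through the Java or C++ API, but the same load-and-invoke cycle can be tried on a desktop with the Python interpreter bindings. The sketch below is only a stand-in for the mobile code: the function name is hypothetical, and it feeds an all-zeros tensor where a real app would feed a camera frame.

```python
def run_tflite_model(model_path: str):
    """Load a .tflite file, run one inference on a dummy input and return the outputs."""
    # Imported lazily so this module can be loaded on machines without TensorFlow.
    import numpy as np
    import tensorflow as tf

    interpreter = tf.lite.Interpreter(model_path=model_path)
    interpreter.allocate_tensors()  # must be called before setting inputs

    input_details = interpreter.get_input_details()
    output_details = interpreter.get_output_details()

    # Placeholder input: zeros with the shape and dtype the model expects.
    first_input = input_details[0]
    dummy = np.zeros(first_input["shape"], dtype=first_input["dtype"])
    interpreter.set_tensor(first_input["index"], dummy)

    interpreter.invoke()
    return [interpreter.get_tensor(out["index"]) for out in output_details]
```

What the returned arrays mean depends on the model. For a detection model they would typically hold boxes, classes and scores, but that layout is an assumption to check against the specific model you convert.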

Now, the question is: why machine learning on-device?

1. Lower latency, no server calls

2. Works offline

3. Data stays on-device

4. Power efficient

5. All sensor data accessible on-device

Achieving ML on the device is very hard, due to constraints like:

1. Tight memory

2. Low energy usage to preserve batteries

3. Little compute power

But TensorFlow Lite has all these constraints covered. It is smaller than 300KB when all supported operators are linked. This is achieved through a compact interpreter and FlatBuffers parsing. FlatBuffers is an efficient cross-platform serialization library, used for game development and other performance-critical apps, that is flexible and memory efficient. FlatBuffers are used during the conversion of a TensorFlow model to TensorFlow Lite.

Apps using TensorFlow Lite via ML Kit:

PicsArt (collage app)

VSCO (photography app)

Applications in Retail and E-commerce

One of the classic use cases is a smarter retail checkout experience.

There has been a lot of buzz around this since the announcement of Amazon Go stores.

In this new-age store, you can shop without a checkout. With the Amazon Go app, you enter the store, pick up the products you want, and leave. No more queues! The store detects products taken from or returned to the shelves and keeps track of them in a virtual cart. A little after you walk out, a receipt is sent and the amount is billed to your Amazon account.
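The virtual cart idea can be illustrated with a few lines of Python. This is purely a toy model and not how Amazon implements it: the class, the product names and the prices (in cents) are all made up, and the `take` and `put_back` calls stand in for events that the store's vision system would emit.

```python
from collections import Counter


class VirtualCart:
    """Toy cart that is updated by 'taken from shelf' and 'returned to shelf' events."""

    def __init__(self, prices):
        self.prices = dict(prices)  # product name -> price in cents
        self.items = Counter()      # product name -> quantity currently held

    def take(self, product):
        if product not in self.prices:
            raise KeyError(f"unknown product: {product}")
        self.items[product] += 1

    def put_back(self, product):
        # Ignore returns of items the shopper is not holding.
        if self.items[product] > 0:
            self.items[product] -= 1

    def total(self):
        return sum(self.prices[p] * n for p, n in self.items.items())


cart = VirtualCart({"milk": 250, "bread": 300})  # hypothetical prices in cents
cart.take("milk")
cart.take("bread")
cart.take("milk")
cart.put_back("milk")  # shopper changes their mind about the second milk
print(cart.total())    # amount billed when the shopper walks out
```

The hard part in a real store is producing those events reliably from cameras and sensors. Once they exist, the bookkeeping itself is simple.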

This experience is made possible by the same kinds of technologies used in self-driving cars, such as computer vision, sensor fusion, and deep learning.

I see a plethora of opportunities and use cases in which we can unleash the power of this technology.

What do you think?